User interfaces (UIs) in mobile and web apps are undergoing a “huge rethink” with the advent of LLM applications in both consumer and enterprise apps.

Traditionally, UIs have focused on providing users with clear and intuitive ways to interact with data and perform tasks. For example, a contact app should enable users to easily find a contact’s phone number through a simple search.

However, LLMs are changing this paradigm. By integrating LLMs, applications can understand and respond to user requests in natural language, whether typed or spoken. This allows for a more conversational and intuitive user experience. Instead of navigating multiple screens and menus, users can simply ask questions like “What is John Smith’s phone number?” or “Show me all contacts from work.” The LLM-powered app then processes the request and returns the relevant information. This may be the first point at which the UI comes into play, in the form of a contact card that displays the result of the user’s query.

Generative UIs prioritize user intent and adapt dynamically, using on-demand UIs to present the most relevant information and actions. In an enterprise setting, for example, a dashboard powered by an LLM could let users ask questions like “What are the top three reasons for customer churn this quarter?” or “How does revenue compare to the same period last year?” The LLM would then analyze the data and present the answer in a concise, understandable table or pie chart.

This paradigm shift moves away from the traditional focus on static UIs and towards more dynamic and personalized user experiences. LLMs empower users to interact with applications in a more natural and intuitive way, ultimately enhancing productivity and improving user satisfaction.

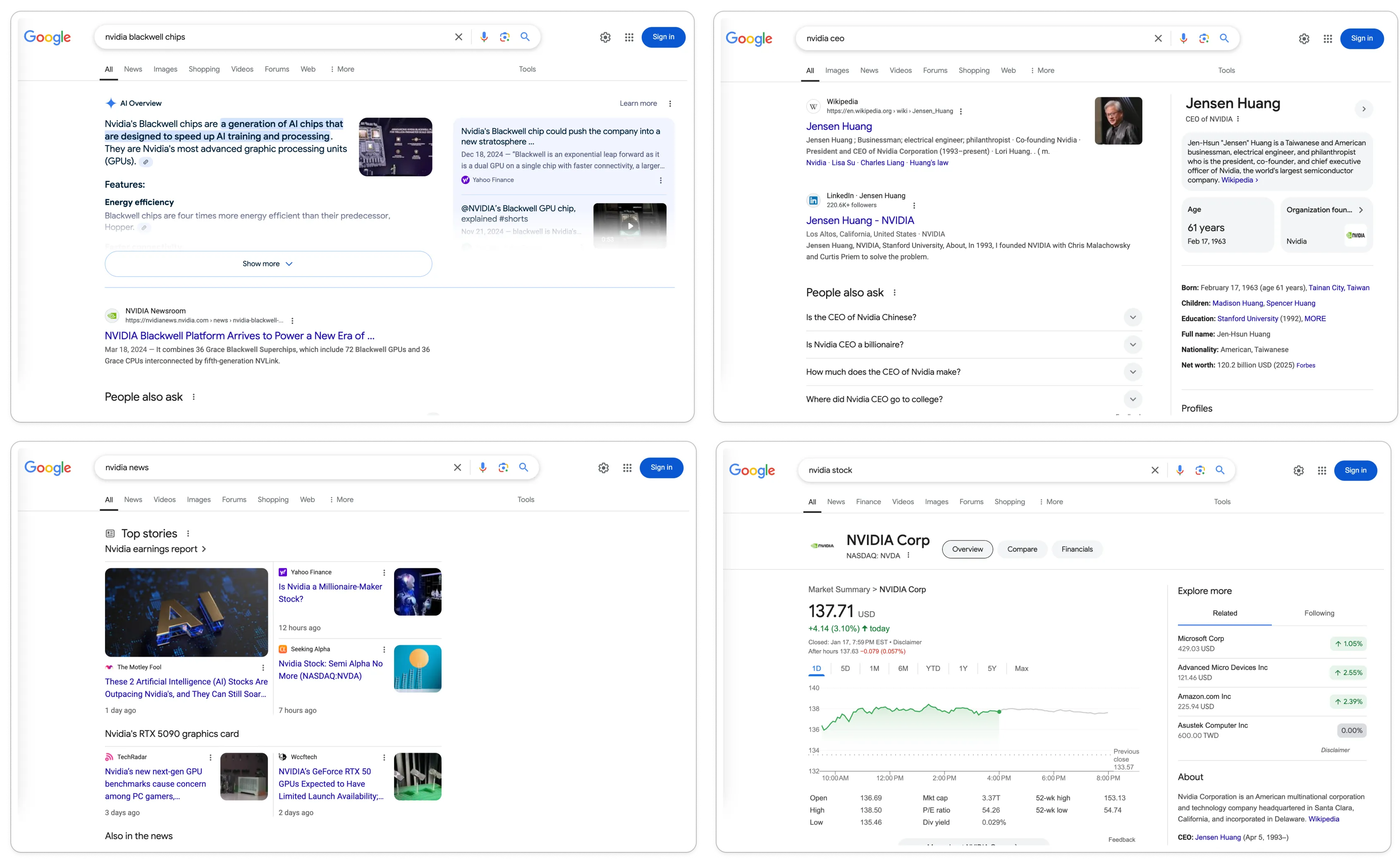

At the end of the day, generative UIs simply produce bespoke UIs, and bespoke UI is not entirely new. What has changed is that generative AI makes building UIs like this far easier. Google Search, for example, has long been a pioneer in this area. The examples below illustrate how Google has been at the forefront of bespoke UI in its search results. These dynamic results show how a search engine can anticipate user needs and deliver tailored information, effectively acting as a bespoke UI.

How are generative UIs implemented?

The underlying implementations vary, but most can be generalized as a tool call: the LLM calls a tool to pick the right UI for the context, and that choice produces the bespoke, or generative, UI. In other words, generative UI leverages LLMs to dynamically generate and stream UI elements, typically through tool calls (function calling). In practice, this often involves integrating technologies such as React Server Components with UI generation capabilities inside a custom application framework.
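To make the tool-call idea concrete, here is a minimal TypeScript sketch. It assumes the model returns a tool call as a name plus JSON-encoded arguments, the shape many function-calling APIs use. The `ToolCall` type, the `uiRegistry` components (`show_contact_card`, `show_table`), and `renderToolCall` are hypothetical names for illustration, not part of any SDK, and plain HTML strings stand in for the React components a real framework would stream. The phone number in the usage example is made up.

```typescript
// Hypothetical tool-call shape: a tool name chosen by the model plus
// JSON-encoded arguments (loosely modeled on common function-calling APIs).
interface ToolCall {
  name: string;
  arguments: string; // JSON string produced by the model
}

// A "UI" is just an HTML string here to keep the sketch self-contained;
// a real app would return React components or another UI tree.
type Renderer = (args: Record<string, unknown>) => string;

// Model output is untrusted input, so escape it before interpolating into HTML.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical registry of UI components the model is allowed to pick from.
// Each key would be advertised to the LLM as a tool it can call.
const uiRegistry: Record<string, Renderer> = {
  show_contact_card: (args) =>
    `<div class="contact-card"><h3>${escapeHtml(String(args.name))}</h3>` +
    `<p>${escapeHtml(String(args.phone))}</p></div>`,
  show_table: (args) => {
    const columns = args.columns as string[];
    const rows = args.rows as unknown[][];
    const head = columns.map((c) => `<th>${escapeHtml(c)}</th>`).join("");
    const body = rows
      .map((r) => `<tr>${r.map((v) => `<td>${escapeHtml(String(v))}</td>`).join("")}</tr>`)
      .join("");
    return `<table><thead><tr>${head}</tr></thead><tbody>${body}</tbody></table>`;
  },
};

// Turn the model's tool call into UI. If the model names a component the
// app does not know about, fall back to a plain message instead of failing.
function renderToolCall(call: ToolCall): string {
  const render = uiRegistry[call.name];
  if (!render) {
    return `<p>Unsupported UI: ${escapeHtml(call.name)}</p>`;
  }
  const args = JSON.parse(call.arguments) as Record<string, unknown>;
  return render(args);
}

// Usage: the model answered "What is John Smith's phone number?" by
// choosing the contact-card component.
const html = renderToolCall({
  name: "show_contact_card",
  arguments: JSON.stringify({ name: "John Smith", phone: "555-0100" }),
});
console.log(html);
// <div class="contact-card"><h3>John Smith</h3><p>555-0100</p></div>
```

Keeping the registry in application code means the model only chooses among components the app already trusts, while the data it fills in is treated as untrusted and escaped before rendering.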

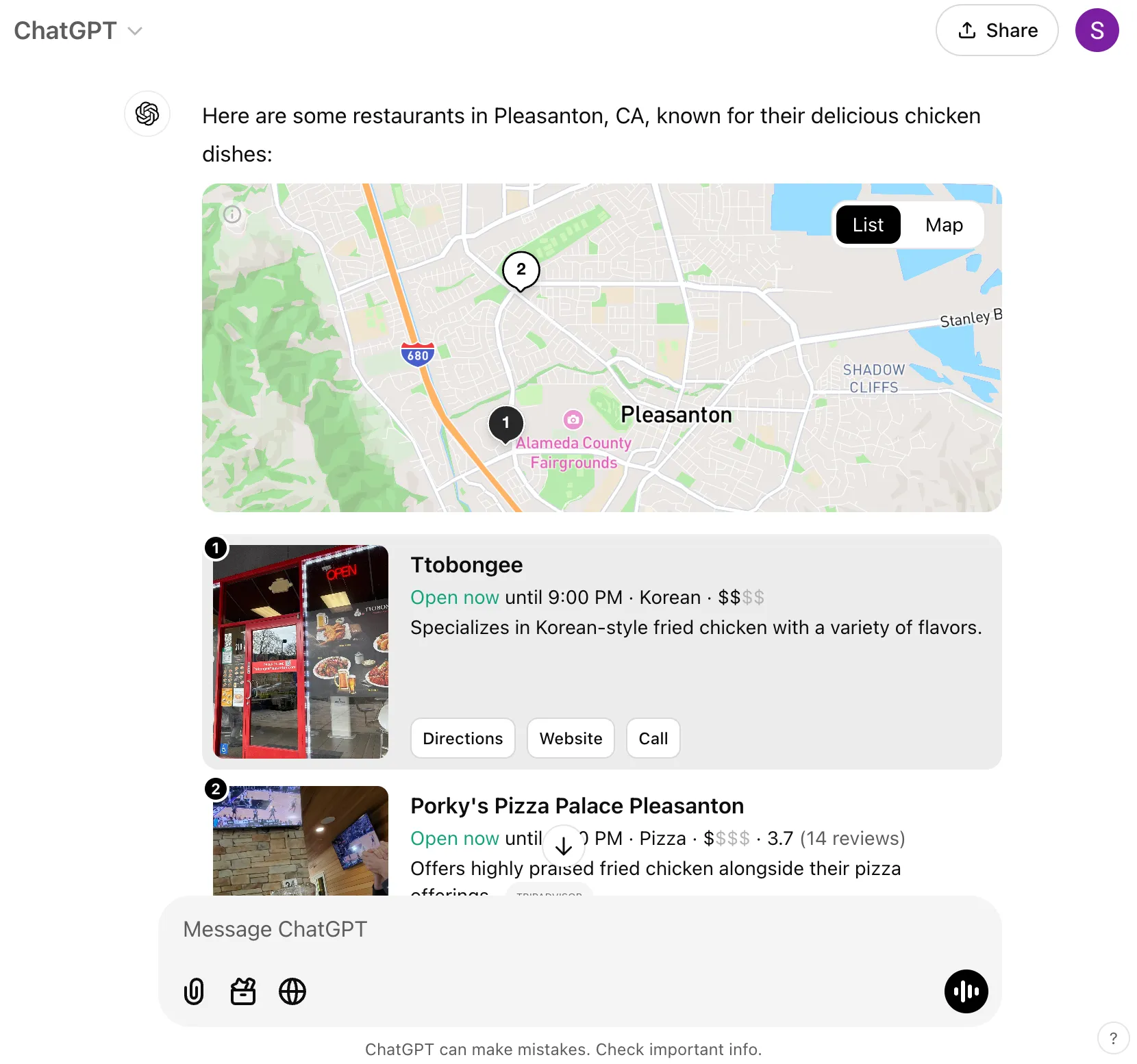

Below is a real example from ChatGPT.

More reading on this topic

- https://uxdesign.cc/envisioning-the-next-generation-of-ai-enhanced-user-experiences-4fff46af7e6e

- https://www.nngroup.com/articles/generative-ui/

- https://vercel.com/blog/ai-sdk-3-generative-ui

- https://js.langchain.com/docs/how_to/generative_ui/

- https://www.youtube.com/watch?v=mL_KuQgX9Oc&ab_channel=LangChain