Running powerful language models locally on your laptop isn’t just a dream; it’s completely doable thanks to quantization. A quantized LLM can fit in your laptop’s GPU memory. Really!

LLM quantization is a technique used to reduce the size and computational requirements of LLMs while maintaining acceptable performance. It involves converting the weights and activations of an LLM from high-precision data types (typically 32-bit or 16-bit floating-point) to lower-precision formats (such as 8-bit or 4-bit integers).
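To make the idea concrete, here is a minimal sketch of symmetric "absmax" quantization in plain Python. It is an illustration under simplifying assumptions: a single scale factor is shared by the whole list of weights, and the helper names `quantize` and `dequantize` are hypothetical. Formats used by tools like llama.cpp quantize weights in small blocks, each with its own scale, which this sketch does not reproduce.

```python
# Minimal sketch of symmetric "absmax" quantization: map float weights to
# signed integers that share one scale factor, then map them back.
# Assumption: one scale for the whole tensor (real LLM formats use
# per-block scales and bit packing; this only shows the core idea).

def quantize(weights: list[float], bits: int = 8) -> tuple[list[int], float]:
    """Quantize floats to signed ints in [-(2**(bits-1) - 1), 2**(bits-1) - 1]."""
    qmax = 2 ** (bits - 1) - 1              # 127 for 8-bit, 7 for 4-bit
    absmax = max(abs(w) for w in weights)   # largest magnitude sets the range
    scale = absmax / qmax if absmax > 0 else 1.0
    # Round to the nearest integer step and clamp to the valid range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.12, -0.53, 0.98, -1.27, 0.004]
    for bits in (8, 4):
        q, s = quantize(w, bits)
        approx = dequantize(q, s)
        err = max(abs(a - b) for a, b in zip(w, approx))
        print(f"{bits}-bit: q={q} scale={s:.4f} max_error={err:.4f}")
```

Dropping from 8 to 4 bits halves the storage per weight but makes each integer step coarser, so the rounding error grows; that is the trade-off behind "acceptable performance".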

Tools like Ollama and LM Studio have made this accessible to everyone, even without specialized hardware.

Take Ollama, for instance. Think of it as the “Docker for language models”: it bundles model weights, configuration, and prompt template into a single package defined by a “Modelfile,” making local AI deployment surprisingly straightforward. Installing it is as simple as downloading an app, whether you’re on Windows, Mac, or Linux. Managing models feels natural with simple commands like `ollama pull` to download a model and `ollama list` to see what you have installed.

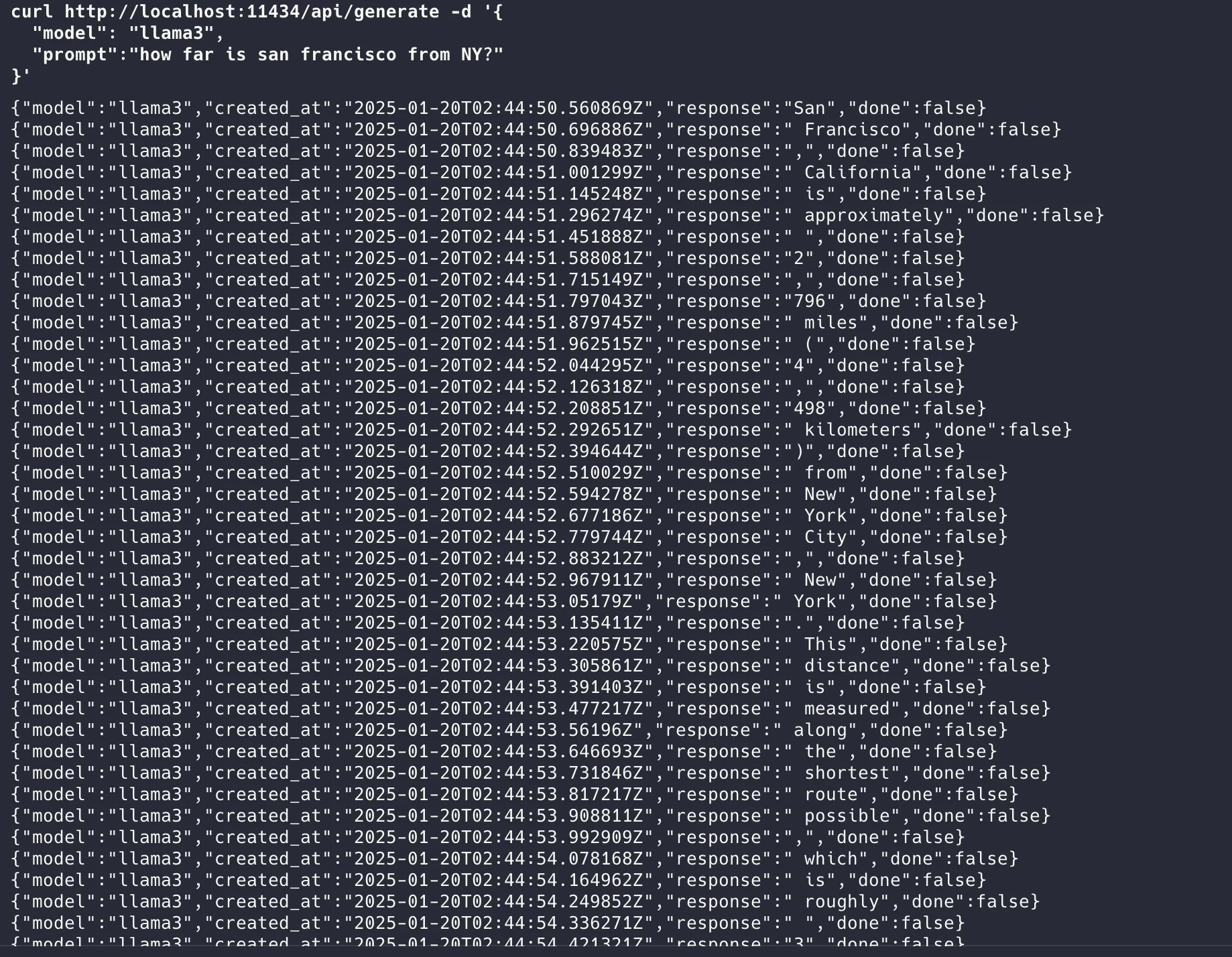

Under the hood, Ollama leverages the efficient llama.cpp library to run models on both CPU and GPU. It comes with a built-in REST API, making it easy to integrate with your applications. Since it runs as a background service, you can interact with your models anytime - no need to launch complex environments or juggle configurations. The whole experience feels remarkably polished, removing the traditional headaches of setting up AI models locally.
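Because the API listens on localhost, any language with an HTTP client can talk to it. Here is a minimal Python sketch using only the standard library. It assumes the Ollama service is running on its default port, 11434, and that a model tagged `llama3` has already been pulled. The `/api/generate` endpoint and its `model`, `prompt`, and `stream` fields come from Ollama's API; the helper names `build_generate_request` and `generate` are hypothetical.

```python
# Minimal sketch of calling Ollama's REST API with the standard library.
# Assumptions: the Ollama service runs locally on its default port (11434)
# and the requested model (e.g. "llama3") has already been pulled.
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str,
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request asking for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request to the local server and return the generated text."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server replies with one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

With the service running, `generate("llama3", "Explain quantization in one sentence.")` returns the model's reply as a string. Setting `stream` to `False` keeps the sketch simple; leaving streaming on would instead return a sequence of JSON objects, one per chunk of generated text.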

The screenshot below shows Meta’s Llama 3 8B-Instruct running on my M1 Mac. It is a 4-bit quantized version of the original 8B model.
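A quick calculation shows why the 4-bit version fits comfortably on a laptop. The sketch below estimates the memory needed to store the weights alone, assuming roughly 8 billion parameters and a uniform bit width for every weight; `weight_memory_gb` is a hypothetical helper. At 16 bits per weight the model needs about 16 GB, while at 4 bits it drops to about 4 GB.

```python
# Back-of-the-envelope memory needed just to hold an LLM's weights.
# Assumption: every weight uses the same bit width. Real quantized files also
# store per-block scale factors, and inference needs extra memory for
# activations and the context, so treat these numbers as lower bounds.

def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Storage for num_params weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 8e9  # roughly the size of an 8B model such as Llama 3 8B
    for label, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("4-bit", 4)]:
        print(f"{label:>5}: {weight_memory_gb(params, bits):5.1f} GB")
```

Actual downloads are somewhat larger than these figures because quantized formats keep scale factors alongside the weights, but the fourfold reduction from 16-bit to 4-bit is what brings an 8B model within reach of a laptop.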

Head over to github.com/ollama/ollama to run your favorite LLMs on your laptop.