Look no further: I'm going to take just 5 minutes of your time to explain how Llama 3 was built. Meta AI's Llama 3 is a good approximation of a typical LLM in Q1 2025, and it's my go-to model because its technical paper is comprehensive, covering everything we need to know.

When I say typical, I am specifically referring to foundation LLMs: generic LLMs for a variety of general-purpose AI tasks such as summarization, translation, code generation, general knowledge, and math problem solving. You can think of them as the infrastructure, or foundation, on which your specific use cases are built in the form of generative AI apps (e.g., ChatGPT, Cursor) or AI agents.

TL;DR

How are LLMs built?

Broadly speaking, LLMs are built through a series of steps within these two main phases:

- Pre-training phase
  - starting with defining a neural network;
  - then feeding it with lots and lots of data;
  - repeating this process over and over again;
- Post-training phase
  - finally, giving the model feedback to make sure its responses are aligned with human interests.

1. Pre-training phase

Let's start from ground zero. In this phase, a few things happen: defining the LLM (neural network), finding the training data, preparing that data, and then performing the pre-training itself.

1. Defining the LLM (neural network)

Let me explain this in simpler terms. All modern LLMs are neural networks. A specific deep neural network architecture called the Transformer is widely used for these models because it is incredibly good at tasks like understanding language and predicting the next word in a sentence. So the “Defining the LLM” step simply means deciding which architecture and which hyperparameters to use to create the LLM, the neural network.

Think of hyperparameters as the settings or specifications of these neural networks. They are chosen before training and are not learned during the training process. Examples of hyperparameters include the size of the model, the learning rate, the maximum output length, and the context window size (the number of tokens the model can “see” at one time as input to understand and make predictions).
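To make this concrete, here is a minimal Python sketch that records hyperparameters as a frozen (read-only) configuration object, which mirrors the idea that they stay fixed while the model's weights are learned. The class and field names are my own; the values are the 8B column of the hyperparameter table in the Llama 3 paper as I understand it, so cross-check them against the paper before relying on them.

```python
from dataclasses import dataclass, FrozenInstanceError


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class TransformerHyperparams:
    n_layers: int      # number of transformer layers
    d_model: int       # model (embedding) dimension
    d_ffn: int         # feed-forward network dimension
    n_heads: int       # attention (query) heads per layer
    n_kv_heads: int    # key/value heads per layer
    vocab_size: int    # number of distinct tokens
    peak_lr: float     # maximum learning rate during training
    rope_theta: float  # base frequency for rotary positional embeddings

    @property
    def head_dim(self) -> int:
        # each attention head works on an equal slice of the model dimension
        return self.d_model // self.n_heads


LLAMA3_8B = TransformerHyperparams(
    n_layers=32, d_model=4096, d_ffn=14336, n_heads=32, n_kv_heads=8,
    vocab_size=128_000, peak_lr=3e-4, rope_theta=500_000.0,
)

print(LLAMA3_8B.head_dim)  # 128

try:
    LLAMA3_8B.n_layers = 64  # trying to change a hyperparameter after the fact
except FrozenInstanceError:
    print("hyperparameters are fixed")
```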

You might ask: what are tokens? A token is a unit of text that a language model treats as a single element. Depending on the tokenization method used, tokens can be as small as single characters, or they can be subwords or entire words.
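Here is a toy illustration of subword tokenization: a greedy longest-match tokenizer over a small, hypothetical vocabulary. Real tokenizers (Llama 3 uses a learned byte-pair-encoding vocabulary of about 128K tokens) are built differently, so treat this only as a sketch of how one piece of text can become several tokens.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedily split text into the longest pieces found in the vocabulary."""
    tokens = []
    i = 0
    while i < len(text):
        # try the longest candidate first, shrinking until something matches
        for j in range(len(text), i, -1):
            piece = text[i:j]
            # fall back to a single character when no longer piece is known
            if piece in vocab or j == i + 1:
                tokens.append(piece)
                i = j
                break
    return tokens


VOCAB = {"token", "iz", "ation", " is", " fun"}  # hypothetical vocabulary
print(tokenize("tokenization is fun", VOCAB))
# ['token', 'iz', 'ation', ' is', ' fun']
```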

For those of you hearing about Transformers or neural networks for the first time, I highly encourage you to watch 3Blue1Brown’s video series here.

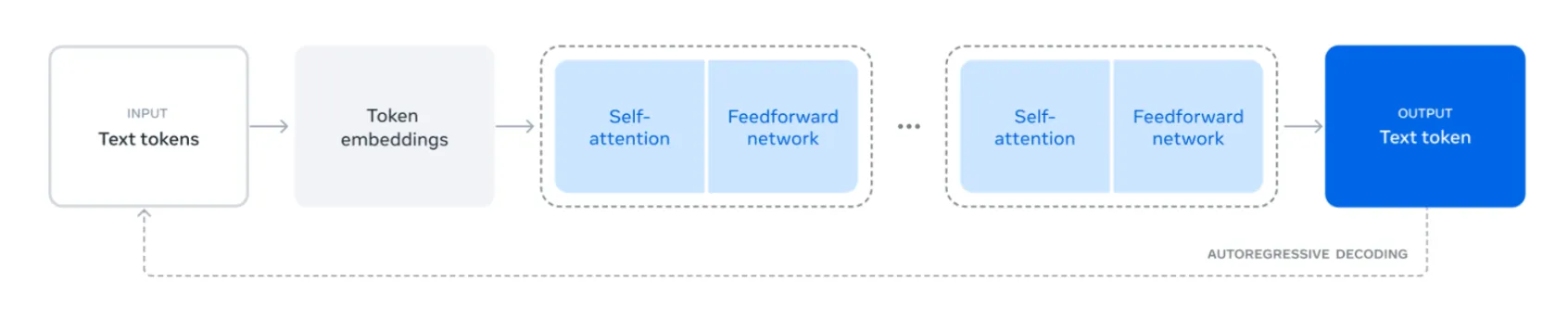

Now that we understand what happens in this first step of pre-training, let's look at the details of Llama 3's pre-training. Taken from the technical paper, below is an illustration of the Llama 3 architecture.

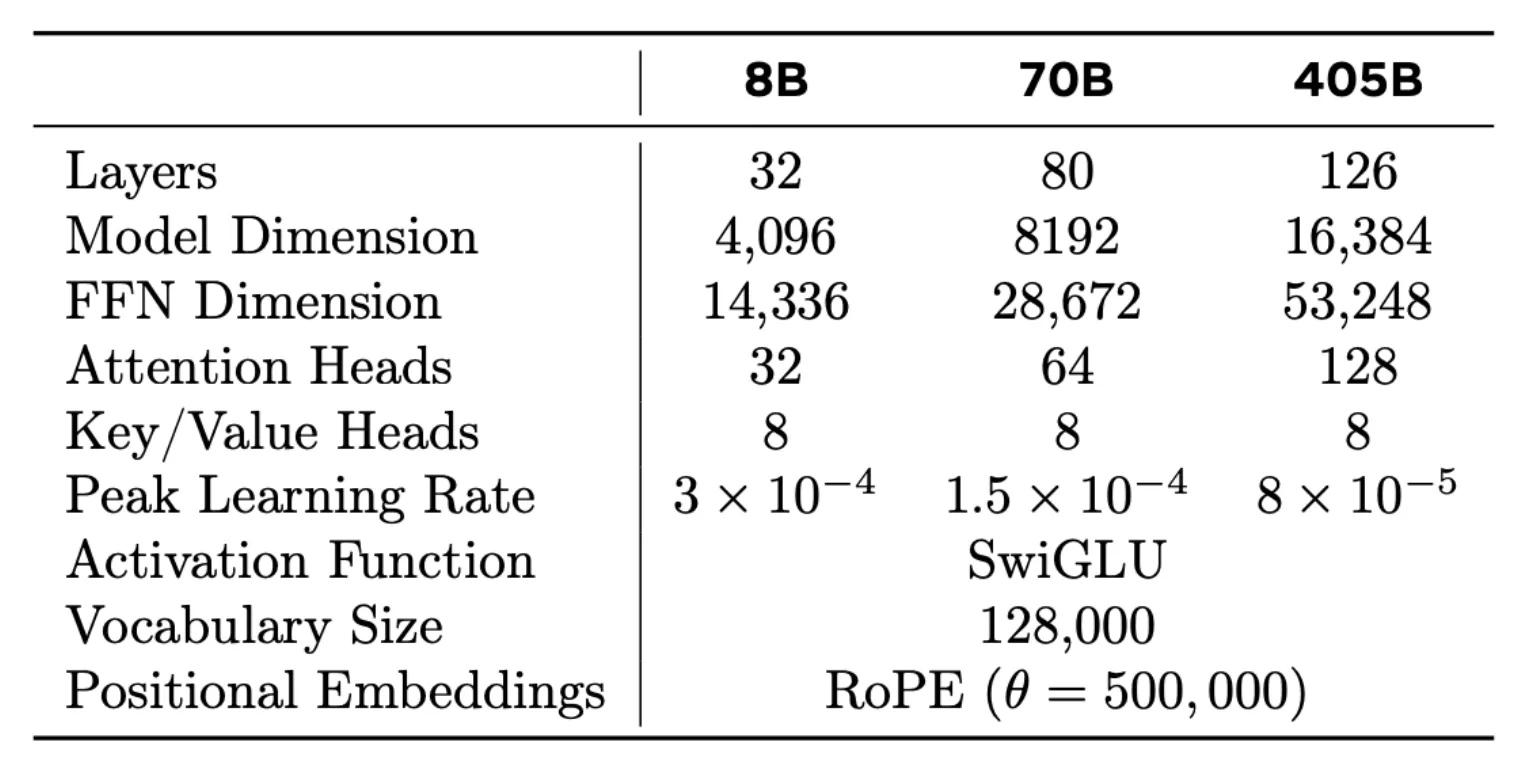

The hyperparameters for Llama 3 are shown in the table below (source: Llama 3 technical paper).

Let me explain each hyperparameter in this table:

Layers: The number of transformer layers (blocks) stacked in the model. Each layer combines an attention sublayer with a feed-forward sublayer and refines the token representations produced by the layer below it.

Model Dimension: Also known as hidden size or embedding dimension, this is the size of the vectors used throughout the model. Each token (word, character, or subword) processed by the LLM is represented as a vector with as many entries as the model dimension. For example, if the model dimension is 4,096, each token is represented as a 4,096-dimensional vector.

You might ask: what are embeddings? LLMs are neural networks, which are like huge, complex math equations, if you will. They cannot read text directly; they need numbers. That is where embedding comes in. Text embedding is a process where text (words, sentences, or entire documents) is converted into a numerical vector (a list of numbers). These vectors capture the semantic meaning of the text, making it easier for models to understand and work with it.
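Inside an LLM, the first step of this conversion is usually an embedding table: one row of numbers per token id, looked up by id. The sketch below assumes a tiny, hypothetical vocabulary of 10 tokens and 4-dimensional vectors initialized at random; in a real model the table is much larger and its values are learned during training.

```python
import random


def make_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> list[list[float]]:
    """One row of `dim` numbers per token id; training would adjust these values."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(vocab_size)]


def embed(token_ids: list[int], table: list[list[float]]) -> list[list[float]]:
    # an embedding layer is just a row lookup by token id
    return [table[token_id] for token_id in token_ids]


table = make_embedding_table(vocab_size=10, dim=4)
vectors = embed([3, 7, 3], table)  # hypothetical token ids
```

Note that the same token id always maps to the same vector; the surrounding context only comes into play in the transformer layers that follow.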

FFN Dimension: The feed-forward network (FFN) dimension is the size of the intermediate layer in each transformer layer's feed-forward network.

Attention Heads: The number of parallel attention mechanisms in each transformer layer. It starts at 32 heads for the smallest model and goes up to 128 heads for the largest, allowing the model to attend to different aspects of the input in parallel.

Key/Value Heads: This remains constant at 8 across all models. Llama 3 uses grouped-query attention (GQA), in which several query heads share one key/value head; this reduces the memory needed to cache keys and values during inference.

Peak Learning Rate: The maximum learning rate used during training. It decreases as models get larger, which is typical because larger models often need more careful optimization.

Activation Function: SwiGLU is the activation function used across all models. It is a variant of the GLU (Gated Linear Unit) activation function known for good performance in transformers.

Vocabulary Size: Set at 128,000 for all models, this is the number of unique tokens the model can recognize and generate.

Positional Embeddings: RoPE (Rotary Positional Embedding) is used to encode position information into the model's representations.
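The relationship between attention heads and key/value heads can be sketched in a few lines. Assuming 8B-sized numbers (32 layers, 32 query heads, 8 key/value heads, and a head dimension of 4,096 / 32 = 128), the code below shows which key/value head each query head shares and how many values must be cached per token, compared with giving every query head its own keys and values.

```python
def kv_head_for_query_head(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    group_size = n_heads // n_kv_heads  # query heads that share one K/V head
    return q_head // group_size


def kv_cache_values_per_token(n_layers: int, n_kv_heads: int, head_dim: int) -> int:
    # keys and values (factor 2) are cached for every K/V head in every layer
    return 2 * n_layers * n_kv_heads * head_dim


n_layers, n_heads, n_kv_heads, head_dim = 32, 32, 8, 128
groups = [kv_head_for_query_head(h, n_heads, n_kv_heads) for h in range(n_heads)]
gqa = kv_cache_values_per_token(n_layers, n_kv_heads, head_dim)
full = kv_cache_values_per_token(n_layers, n_heads, head_dim)

print(groups[:8])            # [0, 0, 0, 0, 1, 1, 1, 1]
print(gqa, full, full // gqa)  # 65536 262144 4
```

With 8 key/value heads shared by 32 query heads, the key/value cache is 4 times smaller than it would be with one key/value head per query head.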

Meta developed a range of models of different sizes, measured by the number of parameters. Parameters in neural networks primarily refer to the weights and biases that the model learns during training. Each connection between neurons has an associated weight, which is a parameter. Each neuron (except, typically, those in the input layer) has a bias, which is also a parameter. The total number of parameters is typically the count of weights plus the count of biases. The three models (8B, 70B, and 405B) are scaled versions of the same architecture, and their size affects how powerful they are.
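You can roughly recover the "8B" in the model's name from its hyperparameters. The sketch below is a back-of-the-envelope count under my own assumptions about the Llama architecture: linear layers without biases, two RMSNorm scale vectors per layer, a SwiGLU feed-forward network with three weight matrices, and separate input-embedding and output-projection matrices. Small details (such as the exact vocabulary size) shift the total slightly.

```python
def approx_llama_params(n_layers: int, d_model: int, d_ffn: int,
                        n_heads: int, n_kv_heads: int, vocab_size: int) -> int:
    head_dim = d_model // n_heads
    # attention: query and output projections are d_model x d_model;
    # key and value projections are d_model x (n_kv_heads * head_dim)
    attention = 2 * d_model * d_model + 2 * d_model * n_kv_heads * head_dim
    # SwiGLU feed-forward network: three weight matrices of size d_model x d_ffn
    feed_forward = 3 * d_model * d_ffn
    # two RMSNorm scale vectors per layer
    norms = 2 * d_model
    # separate input embedding and output projection, plus one final norm
    embeddings = 2 * vocab_size * d_model + d_model
    return n_layers * (attention + feed_forward + norms) + embeddings


total = approx_llama_params(32, 4096, 14336, 32, 8, 128_000)
print(f"{total:,}")  # 8,028,164,096
```

Most of the parameters sit in the feed-forward networks (about 176M per layer here), which is why the FFN dimension is such an important size knob.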

Sample neural network that explains the concept of parameters

Consider a neural network with 3 layers: 1 input layer, 1 hidden layer, and 1 output layer, each with 4 neurons. This model typically has 40 parameters, which it learns as part of the training process. The number of layers, the choice of learning algorithm, and so on are hyperparameters.

- Count of weights = (4 * 4) + (4 * 4) = 32. Each of the input layer's four neurons connects to every neuron in the hidden layer, which gives the first 4 * 4; each of the hidden layer's four neurons connects to every neuron in the output layer, which gives the second 4 * 4.

- Count of biases = 4 + 4 = 8. The input layer has no biases, so each neuron in the hidden layer and the output layer contributes one bias.

Number of parameters = count of weights + count of biases = 32 + 8 = 40
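The same counting rule works for any stack of fully connected layers. This small Python sketch takes a list of layer sizes (a representation I chose for illustration) and reproduces the 32 + 8 = 40 result for the 4-4-4 network.

```python
def count_dense_params(layer_sizes: list[int]) -> tuple[int, int, int]:
    """Return (weights, biases, total) for fully connected layers of the given sizes."""
    # every neuron in one layer connects to every neuron in the next layer
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    # every neuron except those in the input layer has one bias
    biases = sum(layer_sizes[1:])
    return weights, biases, weights + biases


print(count_dense_params([4, 4, 4]))  # (32, 8, 40)
```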

2. Preparing the data to train

Now that we have defined the model, let's look at how to get the data it needs. The data used to train a model must be carefully curated and balanced. In Meta's case, they used roughly 15 trillion (15T) multilingual tokens.

To train the model, these 15T tokens, drawn from an enormous dataset sourced from the internet, were fed into the neural network. The training objective is next-token prediction: given a sequence of tokens, the model predicts the token that comes next. The goal is for the model to learn enough about language, context, and patterns to generate meaningful responses to new, unseen user inputs.
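Here is a minimal sketch of what "next-token prediction" means for a single training sequence. It assumes hypothetical token ids and a made-up probability distribution from the model; in real training the loss is averaged over all positions and all sequences in a batch.

```python
import math


def next_token_examples(token_ids: list[int]) -> list[tuple[list[int], int]]:
    """Every prefix of the sequence is a context; the token after it is the target."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]


def cross_entropy(predicted_probs: dict[int, float], target: int) -> float:
    """Low when the model puts high probability on the true next token."""
    return -math.log(predicted_probs[target])


print(next_token_examples([5, 9, 2, 7]))
# [([5], 9), ([5, 9], 2), ([5, 9, 2], 7)]
print(cross_entropy({9: 0.5, 2: 0.25, 7: 0.25}, target=9))  # about 0.693
```

Training nudges the model's parameters so that this loss goes down, i.e. so that the true next token gets a higher probability.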

Meta trained Llama 3 on data collected from various sources on the internet, up to date as of December 2023 (this is referred to as the knowledge cutoff date). Before training, the data went through a cleaning process to remove duplicates, personal information, and other unwanted content.
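As an illustration of the deduplication step, here is a simplified sketch of exact document-level deduplication by hashing normalized text. This is not Meta's pipeline: production pipelines typically also use fuzzy methods (such as MinHash) and deduplicate at the URL and line level as well.

```python
import hashlib


def normalize(text: str) -> str:
    # lowercase and collapse whitespace so trivial variants hash identically
    return " ".join(text.lower().split())


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing normalized text."""
    seen: set[str] = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept


print(deduplicate(["Hello  world", "hello world", "Something else"]))
# ['Hello  world', 'Something else']
```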

Since much of the data came from web pages, Meta created a custom parser to efficiently process and extract information. They also used two types of tools for filtering and cleaning the data:

- Fast and simple text classifiers to identify and filter out bad or repetitive data.

- More compute-intensive classifiers trained on Llama 2's quality judgments, for more robust classification, such as assigning scores to documents to decide whether to keep them.
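Trained classifiers are hard to show in a few lines, but the flavor of a cheap filter for repetitive data can be sketched with a simple heuristic. The function below is my own illustration, not one of Meta's filters: it drops documents in which too many lines repeat (boilerplate menus, for example), and the 0.3 threshold is an arbitrary, hypothetical choice.

```python
def duplicate_line_ratio(doc: str) -> float:
    """Fraction of non-empty lines that repeat an earlier line."""
    lines = [line.strip() for line in doc.splitlines() if line.strip()]
    if not lines:
        return 1.0  # treat empty documents as fully repetitive
    return 1 - len(set(lines)) / len(lines)


def keep_document(doc: str, max_dup_ratio: float = 0.3) -> bool:
    # the 0.3 threshold is a hypothetical value chosen for illustration
    return duplicate_line_ratio(doc) <= max_dup_ratio


print(keep_document("intro\nbody\nconclusion"))                             # True
print(keep_document("Home | About\nHome | About\nHome | About\nReal text"))  # False
```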

Specialized pipelines were developed to handle specific types of data, such as math and code, separately. To ensure a balanced dataset for training, Meta decided on the following mix of content:

- 50% general knowledge tokens

- 25% mathematical and reasoning tokens

- 17% code tokens

- 8% multilingual tokens
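One simple way to think about such a mix is as sampling weights. The sketch below turns the percentages above into per-category token budgets for a roughly 15T-token run and samples which category each next training document comes from. The category names are mine, and this is not necessarily how Meta's data loader works.

```python
import random

# data mix shares from the list above; category names are illustrative
DATA_MIX = {"general": 0.50, "math_reasoning": 0.25, "code": 0.17, "multilingual": 0.08}


def token_budget(total_tokens: int, mix: dict[str, float]) -> dict[str, int]:
    """Split a total token budget across categories by their mix share."""
    return {name: round(total_tokens * share) for name, share in mix.items()}


def sample_categories(n: int, mix: dict[str, float], seed: int = 0) -> list[str]:
    """Pick which category each of the next n training documents comes from."""
    rng = random.Random(seed)
    return rng.choices(list(mix), weights=list(mix.values()), k=n)


print(token_budget(15 * 10**12, DATA_MIX))
# 7.5T general, 3.75T math/reasoning, 2.55T code, 1.2T multilingual tokens
```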

This careful curation of data helped create a model with a well-rounded understanding across different areas of knowledge. Now that we have prepared the data, let's move on to the actual training in the pre-training phase.

The exact size of the original dataset used to train Llama 3 is unknown, but it is estimated to be about 45 TB.

3. Training in the Pre-training phase

Imagine this process as if you were accelerating a human brain’s learning from infancy to 30 years old in a matter of months. The brain would “experience” vast amounts of information, gaining knowledge and understanding as it processes it. This is essentially what happens with the LLM. It “sees” and “learns” from a massive amount of data to develop its knowledge and generate intelligent responses. For example, after this training process, if you ask, “What is the square root of 16?” the model should understand the question and respond appropriately. Keep in mind, this isn’t just retrieving an answer from a database—this is more comparable to human intelligence. The LLM “thinks” by activating a complex network of artificial neurons and functions, similar to how a human brain processes and responds to questions.

Training Compute Power

The speed and efficiency of training a model depend heavily on the available compute. The total compute used for training is measured in FLOPs (floating-point operations), while hardware speed is measured in FLOP/s (floating-point operations per second). For example, Meta used 3.8 × 10²⁵ FLOPs to train their 405B model on 15.6 trillion text tokens. In AI, scaling up compute (FLOPs), model size (parameters), and the amount of training data (tokens) often leads to better results. However, scaling requires careful budgeting because compute is expensive. Meta followed “scaling laws” to determine the optimal size of their model for the available compute budget.
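A widely used rule of thumb relates these three quantities: training a dense transformer costs roughly 6 FLOPs per parameter per token (about 2 for the forward pass and 4 for the backward pass). This is a general approximation, not necessarily how Meta computed its figure, but plugging in 405B parameters and 15.6T tokens lands very close to the reported 3.8 × 10²⁵ FLOPs.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    # ~6 FLOPs per parameter per training token (forward + backward pass)
    return 6 * n_params * n_tokens


print(f"{training_flops(405e9, 15.6e12):.2e}")  # 3.79e+25
```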

Llama as a Multimodal Model

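Meta also extended Llama 3 with multimodal capabilities, so that it can process not just text but also images, video, and speech (the paper notes that these multimodal models were still under development and not yet broadly released). To achieve this, Meta used modality-specific encoders: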

- Image Encoder: The model was trained on image-text pairs, where each image is linked to a natural language description. This teaches the model to associate visual content with textual meaning.

- Speech Encoder: A self-supervised learning technique was used. Some parts of the speech data were intentionally removed, and the model had to reconstruct and interpret the missing parts. This helps it learn speech patterns and language understanding.

To integrate image inputs into the main language model, Meta used an adapter made of cross-attention layers, which bridges the gap between the image encoder's representations and the core language processing capabilities of the model. For speech, the adapter's outputs are instead fed directly into the language model as token representations.
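The core of a cross-attention layer fits in a few lines: queries come from the text stream, while keys and values come from the image encoder, so each text position pulls in a weighted mix of image features. This single-head sketch leaves out the learned projection matrices, multiple heads, normalization, and gating that a real adapter would have.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_queries: list[list[float]],
                    image_keys: list[list[float]],
                    image_values: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention where text attends to image features."""
    d = len(image_keys[0])
    outputs = []
    for q in text_queries:
        # similarity between this text query and every image key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in image_keys]
        weights = softmax(scores)
        # weighted average of the image value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, image_values))
                        for j in range(len(image_values[0]))])
    return outputs
```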

Pre-training used the AdamW optimizer to update the model's parameters.
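For readers curious what an optimizer actually does, here is a minimal sketch of a single AdamW update for one scalar parameter. The default hyperparameters are borrowed from PyTorch's `torch.optim.AdamW` defaults for illustration, not Meta's settings; real training applies the same update elementwise to billions of parameters.

```python
import math


def adamw_step(param: float, grad: float, m: float, v: float, t: int,
               lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
               eps: float = 1e-8, weight_decay: float = 0.01):
    """One AdamW update for a single scalar parameter; t counts steps from 1."""
    m = beta1 * m + (1 - beta1) * grad          # running average of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running average of squared gradients
    m_hat = m / (1 - beta1 ** t)                # correct the bias toward 0 in early steps
    v_hat = v / (1 - beta2 ** t)
    # decoupled weight decay: shrink the parameter directly, not via the gradient
    param = param - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * param)
    return param, m, v
```

The "W" in AdamW is that decoupled weight-decay term: it pulls parameters toward zero independently of the gradient-based adaptive step.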

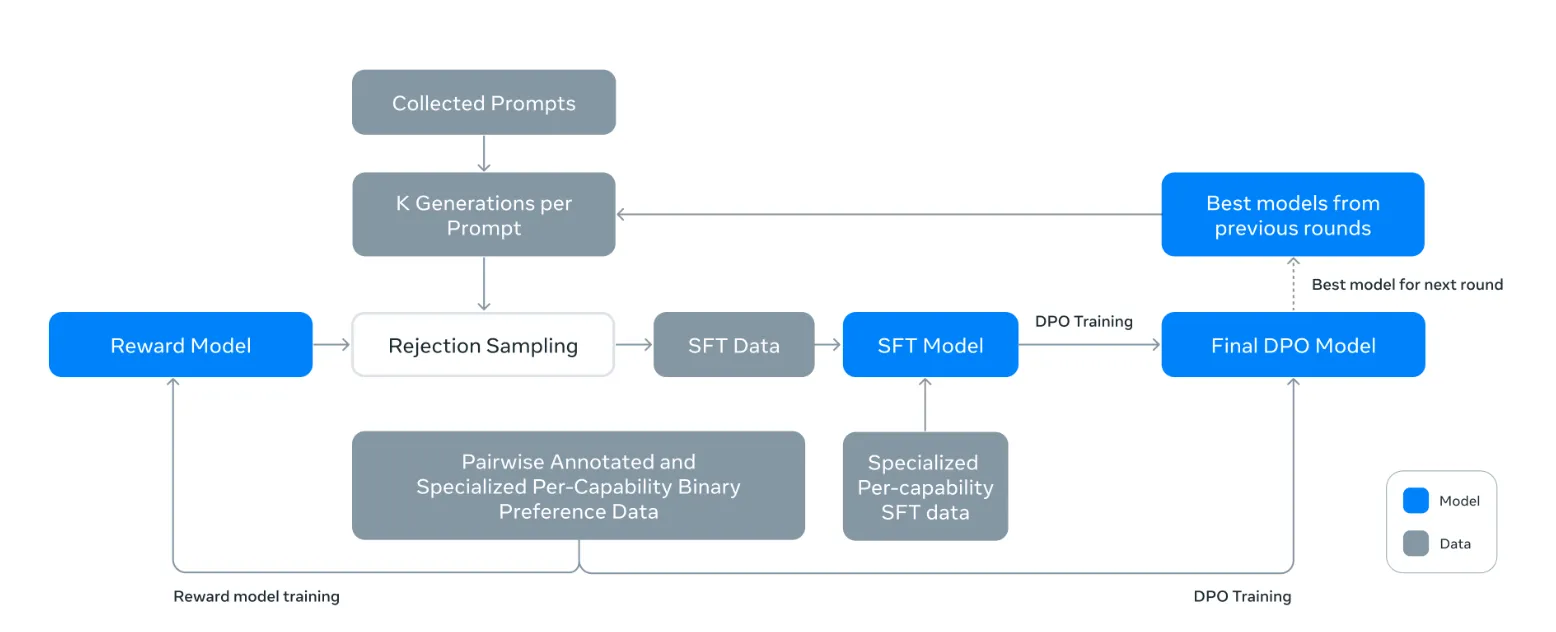

2. Post-training phase

This is where we tackle the alignment problem, at least partially. The goal is to teach the model to behave appropriately and give responses that are useful and practical. Meta's approach combines Supervised Fine-Tuning (SFT), where the model is fine-tuned on curated examples of good prompt-response pairs, with human feedback collected as preferences between alternative responses. In each round, Meta trains a reward model on human preference data, uses it to select high-quality responses for SFT (rejection sampling), and then further aligns the fine-tuned model with Direct Preference Optimization (DPO); this cycle is repeated over several rounds.
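The DPO objective itself is compact. Given the log-probabilities that the model being trained (the policy) and a frozen reference model assign to a preferred ("chosen") and a dispreferred ("rejected") response, the loss rewards the policy for raising the chosen response's likelihood relative to the reference more than the rejected one's. This is a sketch of the standard DPO loss for a single preference pair; the default `beta = 0.1` is a hypothetical choice.

```python
import math


def softplus(x: float) -> float:
    # numerically stable log(1 + exp(x))
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Each argument is the summed log-probability of a full response."""
    # how much more likely the policy makes each response than the reference does
    chosen_shift = policy_chosen - ref_chosen
    rejected_shift = policy_rejected - ref_rejected
    margin = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(margin)) == softplus(-margin)
    return softplus(-margin)


print(dpo_loss(-10.0, -12.0, -10.0, -12.0))  # log(2), about 0.693
```

When the policy matches the reference, the loss is log 2; it falls as the policy shifts probability toward the preferred response and rises if it shifts toward the rejected one.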

As part of post-training, the model is also trained on task-specific data to improve capabilities such as reasoning, coding, and tool use.

One particular thing I want to highlight is tool use. Tools extend what an LLM can do by giving it access to external systems it can use to perform tasks or answer questions.

Remember that in Meta's case, the knowledge cutoff was December 2023. But in the real world, if you ask an LLM about today's weather, it should still be able to answer. This is where tool use comes into play. Llama 3 is trained to use Brave Search to answer questions about recent events, and it is also trained to use other tools such as a Python interpreter and the Wolfram Alpha API for math.
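On the application side, tool use boils down to a loop: the model emits a structured request, the surrounding program runs the matching tool, and the result is added back to the model's context. The sketch below assumes a hypothetical JSON call format and hypothetical stand-in tools; Llama 3's actual tool-call format and its real integrations (Brave Search, Python, Wolfram Alpha) are different.

```python
import json
import operator

# Hypothetical stand-in tools; a real deployment would call actual services.
OPERATIONS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(args: dict) -> float:
    return OPERATIONS[args["op"]](args["a"], args["b"])


def web_search(args: dict) -> str:
    # placeholder: a real system would query a search API here
    return f"[search results for {args['query']!r}]"


TOOLS = {"calculator": calculator, "web_search": web_search}


def run_tool_call(model_output: str) -> str:
    """Parse a JSON tool call emitted by the model and run the matching tool."""
    call = json.loads(model_output)
    tool = TOOLS.get(call["name"])
    if tool is None:
        return f"error: unknown tool {call['name']!r}"
    # the returned string would be appended to the model's context for its next turn
    return str(tool(call.get("arguments", {})))


print(run_tool_call('{"name": "calculator", "arguments": {"op": "*", "a": 6, "b": 7}}'))  # 42
```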

A bit about Hardware

Llama 3 was trained on the following hardware. Imagine the scale and cost involved.

- 16K H100 GPUs

- These GPUs were hosted in servers of 8 GPUs each (with 2 CPUs per server), which works out to roughly 2,000 servers.

- The interconnect between these GPUs ran at 400 Gbps.
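Combining the compute figure from earlier with this hardware gives a rough sense of the timescale. This is a back-of-the-envelope estimate, not a number from the paper: it assumes about 989 × 10¹² FLOP/s of dense BF16 peak throughput per H100, a hypothetical 40% utilization, and all 16K GPUs running for the whole job, and it ignores failures and restarts.

```python
SECONDS_PER_DAY = 86_400


def training_days(total_flops: float, n_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    # sustained throughput of the whole cluster, in FLOP/s
    cluster_flops_per_s = n_gpus * peak_flops_per_gpu * utilization
    return total_flops / cluster_flops_per_s / SECONDS_PER_DAY


# 3.8e25 FLOPs from above; per-GPU peak and 40% utilization are assumptions
print(round(training_days(3.8e25, 16_000, 989e12, 0.40)))  # 69
```

Under these assumptions, pre-training the 405B model takes on the order of two months of continuous use of the whole cluster.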

Benchmarking

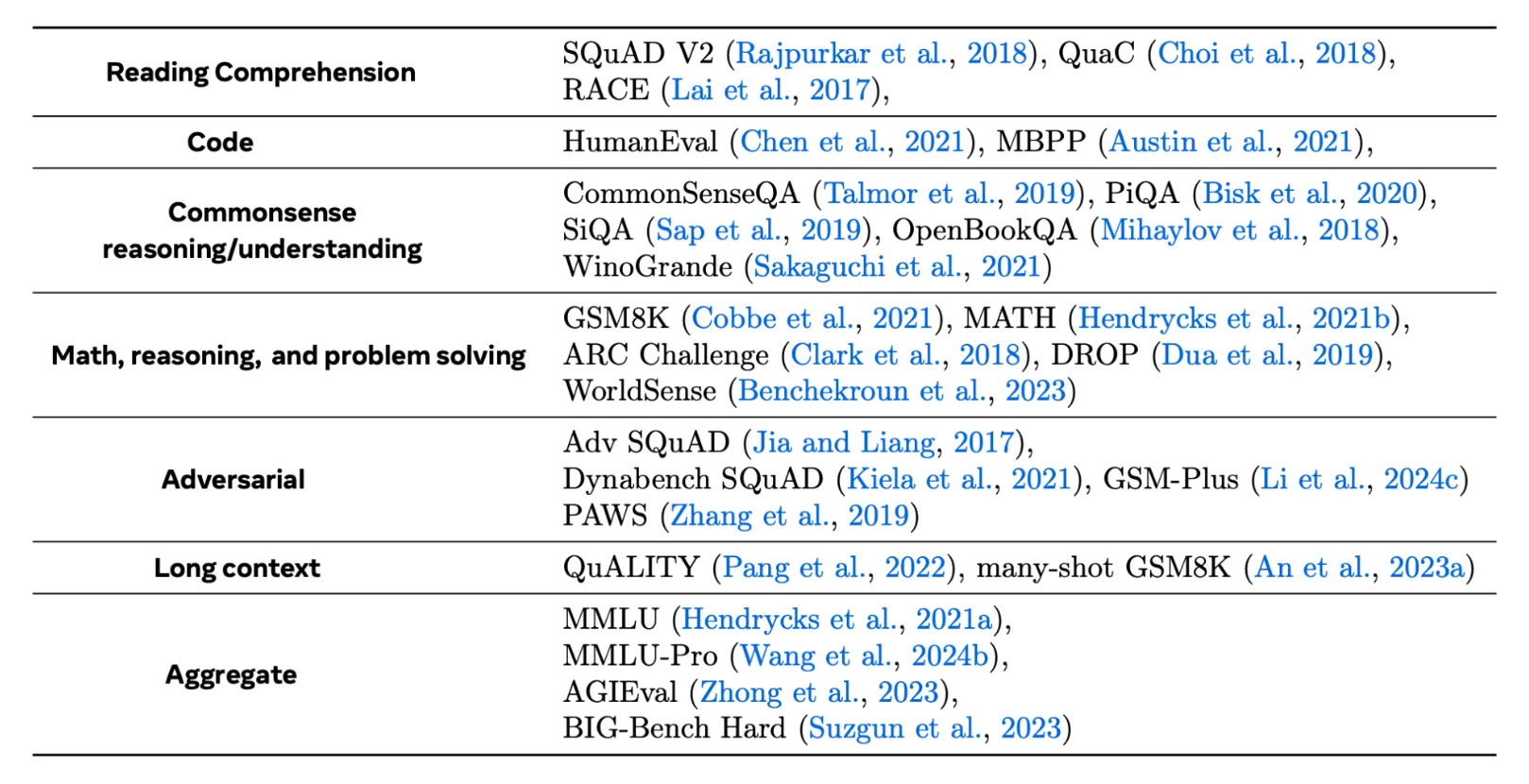

After post-training, LLMs also go through benchmarking, which reports their performance on a range of standard tasks as a proxy for real-world performance. The evaluation results for Llama 3 are shown in the benchmark table.
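Many benchmarks are ultimately scored with simple metrics such as accuracy. The sketch below shows exact-match accuracy with light normalization; it is a hypothetical, simplified scorer, since real evaluation harnesses also handle prompt formatting, answer extraction, and multiple-choice scoring.

```python
def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Share of predictions that match the reference after light normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")

    def norm(s: str) -> str:
        # ignore case and extra whitespace when comparing answers
        return " ".join(s.strip().lower().split())

    hits = sum(norm(p) == norm(r) for p, r in zip(predictions, references))
    return hits / len(references)


print(exact_match_accuracy(["4", " Paris ", "blue"], ["4", "paris", "red"]))  # 0.666...
```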

Read the full Llama 3 technical paper from Meta here. I'm thankful for the groundbreaking work by Meta's AI research team. It's about 70 pages of content if you exclude the citations. I wanted to share this because if you haven't had the chance to read it yet, now is the perfect time to dive in. With advancements like Llama 4 and even more powerful LLMs on the horizon, staying ahead of the curve has never been more crucial.

Hopefully this answers the question “Have you wondered how a typical LLM is built?”