So over the weekend, I found myself in that familiar coding zone again, the one where time just disappears and you’re completely absorbed in building something cool. This time around, I was itching to play with Llama 4’s multimodal features, so I ended up vibe coding a makeup recommendation app.

Why Makeup? (And Why My Wife’s Sephora Struggle Sparked This Idea)

Okay, so this might sound random coming from someone who spends most of his time building tech products, but there’s actually a pretty specific story behind this.

A few weeks back, I was waiting outside a Sephora store while my wife was trying to find a makeup product. What should have been a quick 15-minute trip turned into over an hour. When she finally came out, she was frustrated and honestly a bit defeated.

Here’s what happened: she’d spent forever swatching different shades on her hand, trying to figure out which one matched her face. The lighting in the store made everything look different. The sales associate was helpful but swamped with other customers. She ended up buying two different shades because she couldn’t decide, figuring she’d return the one that didn’t work.

Plot twist: neither of them worked perfectly when she tried them at home.

This whole experience got me thinking. Here we are in 2025, and we have AI that can identify objects in photos, analyze facial features, even generate art from text prompts. We can literally ask our phones to recognize songs or translate languages in real time. But my wife still has to play this expensive guessing game with hundreds of makeup shades?

Watching her go through this frustration made me wonder: why can’t we just take a selfie and be told exactly which brand’s shade would work? The technology exists. The problem is real. Someone just needs to connect the dots.

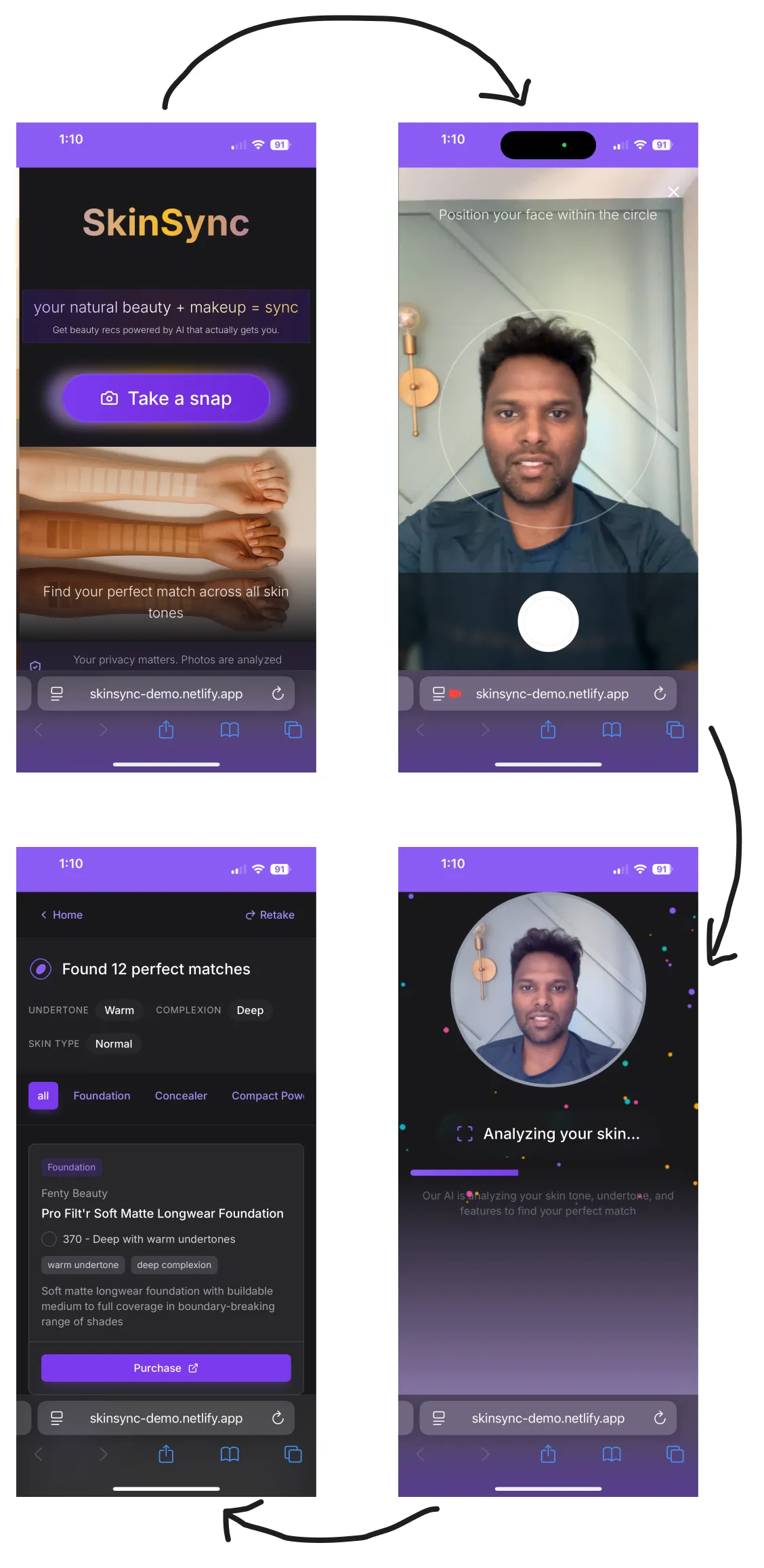

That’s how SkinSync was born. Yes, SkinSync is the name of the app (had to be creative, you know). See the app in action below:

It starts by asking you to upload a selfie. It then uses AI (the Llama 4 API) to analyze your skin tone, undertones, and complexion. Finally, it matches that analysis against an inventory of products (which a Perplexity AI agent sourced from the internet) to recommend the best makeup products in the shade that works for you.

The Weekend Build Breakdown

Let me walk you through how this actually came together, because it was honestly one of those builds where everything just clicked.

Starting with Bolt.new (And Why It’s Actually Amazing)

I’d been hearing about Bolt.new from other developers, but I’ll admit I was skeptical. I already use Cursor for my side projects, so my first thought was: another AI coding tool? Sure. But boy, was I wrong about this one.

Bolt.new isn’t just another code generator. It’s more like having a really smart developer sitting next to you who happens to work at 10x speed. You give it detailed specs (and I mean detailed; I wrote what felt like a small novel describing exactly what I wanted), and it builds you a complete, working application.

My prompt was basically a technical requirements document. I specified how the Llama 4 integration should work, what the user flow needed to look like, how the data should be structured, everything. You can check out the full prompt here if you’re curious about the level of detail I went into.

What blew my mind was that within maybe 10 minutes, I had a fully functional React app with Supabase already configured as the database and edge function provider. Not just boilerplate code; it was an actual working application with a clean UI and proper architecture.

But here’s the thing: it’s not magic and it’s not perfect. Bolt.new occasionally gets stuck, especially with more complex integrations. When it kept going in circles trying to set up the Supabase edge function, I had to step in and debug it manually. That’s where having an engineering background really helped. A tech background tells you what to ask the AI to do, and just as importantly, which tools or platforms to use. For example, why use Supabase edge functions?

The Supabase Edge Function Dance

Quick sidebar on why we needed the edge function in the first place. You can’t just call the Llama API directly from the frontend. Doing so would expose your API keys to anyone who knows how to open dev tools. Not great for security or your API bill.

So the edge function acts like a middleman. The frontend sends the image to our Supabase function, which then securely calls Llama 4, processes the response, and sends back the analysis. Clean, secure, and it keeps everything server-side where it belongs.
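To make the middleman idea concrete, here is a minimal sketch of what such a function could look like. It is not the actual SkinSync code. It assumes the Llama 4 provider exposes an OpenAI-compatible chat completions endpoint that accepts images as data URLs; the `LlamaConfig` shape, the `LLAMA_API_KEY` secret name, and the JPEG assumption are all hypothetical.

```typescript
// Hypothetical config: the endpoint URL and model id depend on your Llama 4 provider.
interface LlamaConfig {
  endpoint: string; // assumed to be an OpenAI-compatible chat completions URL
  model: string;
  apiKey: string; // read from a server-side secret, never shipped to the browser
}

interface OutboundRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build the provider request. Kept as a pure function so it is easy to test.
function buildLlamaRequest(imageBase64: string, prompt: string, cfg: LlamaConfig): OutboundRequest {
  return {
    url: cfg.endpoint,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${cfg.apiKey}`,
      },
      body: JSON.stringify({
        model: cfg.model,
        messages: [
          {
            role: "user",
            content: [
              { type: "text", text: prompt },
              // Assumes the selfie is a JPEG; adjust the MIME type as needed.
              { type: "image_url", image_url: { url: `data:image/jpeg;base64,${imageBase64}` } },
            ],
          },
        ],
      }),
    },
  };
}

// Handler: receives { image } from the frontend, calls the model, relays the reply.
async function handleAnalyze(req: Request, cfg: LlamaConfig, prompt: string): Promise<Response> {
  if (req.method !== "POST") {
    return new Response("Method not allowed", { status: 405 });
  }
  const { image } = await req.json();
  if (typeof image !== "string" || image.length === 0) {
    return new Response(JSON.stringify({ error: "image is required" }), { status: 400 });
  }
  const { url, init } = buildLlamaRequest(image, prompt, cfg);
  const upstream = await fetch(url, init);
  if (!upstream.ok) {
    return new Response(JSON.stringify({ error: "analysis failed" }), { status: 502 });
  }
  const data = await upstream.json();
  // Assumes an OpenAI-style response shape (choices[0].message.content).
  const content = data?.choices?.[0]?.message?.content ?? "";
  return new Response(JSON.stringify({ analysis: content }), {
    headers: { "Content-Type": "application/json" },
  });
}

// In a Supabase edge function (Deno runtime) this could be wired up roughly as:
// Deno.serve((req) => handleAnalyze(req, { endpoint, model, apiKey: Deno.env.get("LLAMA_API_KEY")! }, PROMPT));
```

The key never appears in anything the browser downloads: the frontend only knows the function's URL, and the secret lives in the function's environment. A real deployment would also need CORS headers, which are left out here to keep the sketch short.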

Making Llama 4 Actually Useful (Prompt Engineering is Everything)

Here’s where things got really interesting. Getting Llama 4 to analyze a photo and spit out useful makeup recommendations isn’t as crazy as you’d think. The difference between getting back a useless paragraph of text and getting structured data you can actually work with comes down to prompt engineering.

I needed Llama to:

- Look at the uploaded selfie and analyze skin tone, undertones, and complexion

- Consider factors like lighting conditions and photo quality

- Return everything as structured JSON (not a paragraph of prose)

- Be consistent across different types of photos

The prompt engineering took a few iterations to get right, but once I nailed it, the results were surprisingly accurate. You can see the full prompt here – it’s basically like writing really specific instructions for a very literal-minded assistant.
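Asking for JSON is only half the job; the app also has to check what comes back. Below is a small sketch of that validation step. The field names (`skinTone`, `undertone`, `confidence`) are hypothetical stand-ins, since the real response schema lives in the full prompt, not in this post.

```typescript
// Hypothetical shape for the analysis; the real app's field names may differ.
type Undertone = "cool" | "warm" | "neutral";

interface SkinAnalysis {
  skinTone: string; // e.g. "light", "medium", "deep"
  undertone: Undertone;
  confidence: number; // 0..1, expected to drop with poor lighting or photo quality
}

const UNDERTONES: readonly string[] = ["cool", "warm", "neutral"];

// Models sometimes wrap JSON in prose or code fences, so take the outermost {...} first.
function parseSkinAnalysis(raw: string): SkinAnalysis {
  const start = raw.indexOf("{");
  const end = raw.lastIndexOf("}");
  if (start === -1 || end <= start) {
    throw new Error("No JSON object found in model response");
  }
  const parsed: unknown = JSON.parse(raw.slice(start, end + 1));
  if (typeof parsed !== "object" || parsed === null) {
    throw new Error("Model response is not a JSON object");
  }
  const { skinTone, undertone, confidence } = parsed as Record<string, unknown>;
  if (typeof skinTone !== "string" || skinTone.length === 0) {
    throw new Error("skinTone is missing");
  }
  if (typeof undertone !== "string" || !UNDERTONES.includes(undertone)) {
    throw new Error("undertone must be cool, warm, or neutral");
  }
  if (typeof confidence !== "number" || confidence < 0 || confidence > 1) {
    throw new Error("confidence must be a number between 0 and 1");
  }
  return { skinTone, undertone: undertone as Undertone, confidence };
}
```

Failing loudly on a malformed response makes it easy to retry the call or ask the user for a better-lit photo, instead of quietly recommending products from bad data.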

The Data Problem (And How Perplexity Saved My Weekend)

Okay, so now I had a working app that could analyze photos. Great! But it was recommending… nothing. Because I had no product data.

In the old days, this would have meant spending hours manually researching makeup brands, cataloging products, figuring out which shades work for which skin types, basically the kind of tedious work that makes you question your life choices.

Instead, I decided to see if I could get AI to do this grunt work for me. Enter Perplexity.

I treated Perplexity like a research assistant. I gave it a detailed brief: go research major makeup brands, find their foundation/concealer/powder products, analyze the shade ranges, categorize them by skin type compatibility, and format everything according to my database schema. Oh, and export it as a CSV so I can directly import it into Supabase.

What happened next was honestly magical. Perplexity spent about 5 minutes doing what would have taken me an entire day (maybe more). It browsed brand websites, analyzed product information, organized everything into the exact format I needed, and handed me back a perfectly structured CSV with hundreds of products.

The full prompt is here if you want to see how I structured the request, but the key was being really specific about what I needed and how I needed it formatted.
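AI-generated data still deserves a sanity check before it goes into the database. Here is a rough sketch of a pre-import validation pass over the CSV. The column names and allowed values are assumptions for illustration; the real schema is whatever the Perplexity brief specified.

```typescript
// Hypothetical columns; the real schema came from the Perplexity brief.
interface ProductRow {
  brand: string;
  product_name: string;
  category: string; // expected: foundation, concealer, or powder
  shade_name: string;
  undertone: string; // expected: cool, warm, or neutral
}

const CATEGORIES = ["foundation", "concealer", "powder"];
const UNDERTONE_VALUES = ["cool", "warm", "neutral"];

// Split one CSV line, honoring double-quoted fields and "" escapes.
function splitCsvLine(line: string): string[] {
  const fields: string[] = [];
  let current = "";
  let inQuotes = false;
  for (let i = 0; i < line.length; i++) {
    const ch = line[i];
    if (inQuotes) {
      if (ch === '"' && line[i + 1] === '"') { current += '"'; i++; }
      else if (ch === '"') inQuotes = false;
      else current += ch;
    } else if (ch === '"') inQuotes = true;
    else if (ch === ",") { fields.push(current); current = ""; }
    else current += ch;
  }
  fields.push(current);
  return fields.map((f) => f.trim());
}

// Returns the rows that pass every check plus a readable error per rejected row.
function validateCatalog(csv: string): { valid: ProductRow[]; errors: string[] } {
  const lines = csv.split(/\r?\n/).filter((l) => l.trim() !== "");
  const header = splitCsvLine(lines[0] ?? "");
  const valid: ProductRow[] = [];
  const errors: string[] = [];
  lines.slice(1).forEach((line, idx) => {
    const rowNum = idx + 2; // header counts as row 1 (blank lines are skipped)
    const cells = splitCsvLine(line);
    if (cells.length !== header.length) {
      errors.push(`row ${rowNum}: expected ${header.length} columns, got ${cells.length}`);
      return;
    }
    const rec: Record<string, string> = {};
    header.forEach((name, i) => { rec[name] = cells[i]; });
    const brand = rec["brand"] ?? "";
    const product_name = rec["product_name"] ?? "";
    const shade_name = rec["shade_name"] ?? "";
    const category = (rec["category"] ?? "").toLowerCase();
    const undertone = (rec["undertone"] ?? "").toLowerCase();
    if (!brand || !product_name || !shade_name) {
      errors.push(`row ${rowNum}: missing required field`);
      return;
    }
    if (!CATEGORIES.includes(category)) {
      errors.push(`row ${rowNum}: unknown category "${category}"`);
      return;
    }
    if (!UNDERTONE_VALUES.includes(undertone)) {
      errors.push(`row ${rowNum}: unknown undertone "${undertone}"`);
      return;
    }
    valid.push({ brand, product_name, category, shade_name, undertone });
  });
  return { valid, errors };
}
```

Running something like this before the Supabase import surfaces rows where the agent invented a category or left a shade blank, which is much cheaper than discovering them through odd recommendations later.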

The Polish Phase (Where I Probably Got a Bit Obsessive)

Bolt.new’s first attempt was honestly pretty impressive, but you know how it is, you start nitpicking little things, and before you know it, you’ve spent way more time than planned perfecting details.

I ended up going through about 6 million tokens (yeah, I tracked it) of back-and-forth with Bolt.new, tweaking the UI, adjusting the user flow, fixing edge cases. The final result is a React app that actually feels polished.

The Result

SkinSync ended up being exactly what I envisioned: a clean, responsive web app that works great on both mobile and desktop. You upload a selfie, get an AI analysis of your skin, and receive personalized makeup recommendations.

The whole thing is hosted on Netlify with automatic deployments from GitHub, because why make deployment harder than it needs to be?

Try it out: SkinSync App

Check the code: GitHub

What I Learned (The Stuff That Actually Matters)

This build taught me a few things that I think are pretty relevant for anyone building products right now:

AI tools are incredible force multipliers, but they’re not magic. Bolt.new and Perplexity saved me probably days of work, but they still needed human guidance. Knowing when to step in and when to let the AI run is becoming a crucial skill.

Prompt engineering is basically product design for AI. The quality of your output is directly proportional to how well you can communicate what you want. It’s like writing user stories, but for AI.

The data collection game has completely changed. Instead of manually gathering information, you can now have AI agents do research for you. But you still need to know what questions to ask and how to validate the results.

Rapid prototyping is getting ridiculous (in a good way). Going from idea to working prototype in a weekend means you can test assumptions and get feedback way faster than ever before.

Appendix

Technical Architecture

For those interested in the technical details, here’s how SkinSync is structured under the hood:

Frontend: React with Vite for fast development and optimal bundling

Backend: Supabase edge functions handling AI API calls and business logic

Database: Supabase PostgreSQL storing product catalog

AI Integration: Llama 4 for image analysis and recommendation logic

Deployment: Netlify for seamless CI/CD from GitHub

I understand that there is more to a large-scale recommender system, but for the scope of this project, this is a small-scale matching algorithm that is good enough to demonstrate the overall concept.
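To give a feel for what a small-scale matcher can look like, here is an illustrative sketch. It is not the scoring SkinSync actually uses. The numeric `depth` scale, the penalty weights, and the type names are all assumptions made up for the example.

```typescript
// Hypothetical types: the post does not show the real schema or scoring rules.
type Tone = "cool" | "warm" | "neutral";

interface ToneProfile {
  undertone: Tone;
  depth: number; // assumed scale: 1 (fairest) to 10 (deepest)
}

interface Shade {
  brand: string;
  shade: string;
  undertone: Tone;
  depth: number;
}

// Rank shades by distance from the user's profile; a lower score is a better match.
function recommendShades(profile: ToneProfile, catalog: Shade[], limit = 3): Shade[] {
  return catalog
    .map((s) => {
      const depthGap = Math.abs(s.depth - profile.depth);
      // Same undertone costs nothing, a neutral on either side costs a little,
      // and opposite undertones (cool vs warm) cost the most.
      const undertonePenalty =
        s.undertone === profile.undertone
          ? 0
          : s.undertone === "neutral" || profile.undertone === "neutral"
            ? 1
            : 3;
      return { shade: s, score: depthGap + undertonePenalty };
    })
    .sort((a, b) => a.score - b.score)
    .slice(0, limit)
    .map((x) => x.shade);
}
```

For a catalog of a few hundred products, scoring every row in memory like this is plenty fast. A larger system would more likely push the filtering into a database query and learn the weights from user feedback.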

Prompts

The magic of this build really came down to effective prompt engineering. Here are the key prompts that made everything work:

- Perplexity Prompt for Data Gathering - View Full Prompt

- Bolt.new Prompt to Build the App - View Full Prompt

- Llama 4 Prompt to Analyze Photos and Return Structured Responses - View Full Prompt

Tools Used

- Llama 4 Maverick

- Perplexity (acting as an AI research agent)

- Bolt.new

- Supabase (PostgreSQL database)

- Supabase edge functions (serverless functions in the cloud)

- React

- Netlify

- GitHub